AI is transforming how firms automate processes, assist customers, and derive insights. Yet even the most advanced AI systems occasionally generate information that is incorrect, fabricated, or not grounded in real data. These errors, known as AI hallucinations, represent one of the most critical challenges in enterprise AI adoption.

This article provides a technical yet accessible overview of AI hallucinations: what they are, why they occur, and how organisations can systematically reduce them.

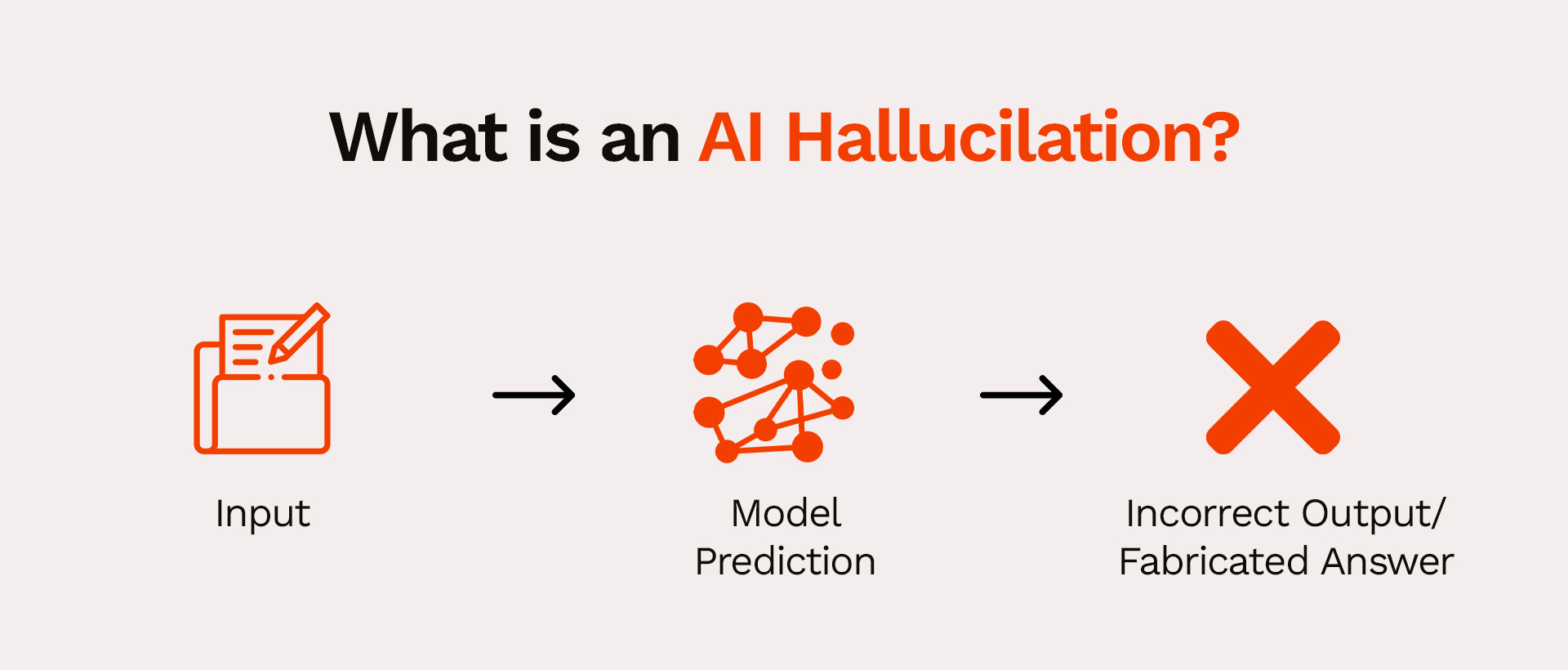

AI hallucinations occur when a model produces factually incorrect or invented content while presenting it confidently and coherently. In short, the output sounds plausible but is not grounded in facts.

These outputs may include:

Hallucinations arise because LLMs generate probabilistic text, not verified truth. They predict the next likely word (more precisely, the next token) based on learned patterns, not on factual accuracy.
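The idea that an LLM chooses the next word by probability rather than by checking facts can be illustrated with a toy sampler. This is a minimal sketch under stated assumptions: the candidate words and their scores (`logits`) are hypothetical, and a real model scores tens of thousands of tokens at every step. Note that nothing in the procedure asks whether the chosen word is true.

```python
import math
import random

def softmax(logits):
    """Convert raw scores (logits) into a probability distribution."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - m) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def sample_next_token(logits, temperature=1.0, rng=random):
    """Sample the next token from the distribution; no fact-checking happens here."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    probs = softmax(scaled)
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0], probs

# Hypothetical scores for the word after "Our primary cloud provider is ..."
logits = {"AWS": 2.1, "Azure": 1.9, "GCP": 1.4, "on-premises": 0.3}
token, probs = sample_next_token(logits, temperature=1.0, rng=random.Random(0))
print(token, {t: round(p, 3) for t, p in probs.items()})
```

Whichever word is sampled, the model will continue fluently from it, which is why a wrong but plausible pick reads just as confidently as a correct one.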

AI hallucinations stem from the inherent design of large language models and the data they are trained on. Below are the most commonly cited reasons behind hallucinations:

AI models learn from massive datasets containing text from the internet, articles, repositories, and documentation. If:

The model fills the gap by generating plausible-sounding but incorrect information.

LLMs operate on probability distributions. When unsure, the model still attempts to respond, because its objective is to generate coherent text, not to guarantee accuracy.
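To see why "unsure" still produces an answer, compare a plain decoder with a hypothetical wrapper that abstains when the distribution is too flat. This is a sketch only: the probabilities and the 1-bit entropy threshold are illustrative assumptions, and token-level uncertainty is at best a rough signal, since a model can also be confidently wrong.

```python
import math

def entropy(probs):
    """Shannon entropy in bits; higher means the distribution is flatter (less certain)."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)

def greedy_answer(probs):
    """A plain decoder always returns something: the most likely option."""
    return max(probs, key=probs.get)

def answer_or_abstain(probs, max_entropy_bits=1.0):
    """Hypothetical wrapper: decline to answer when uncertainty exceeds a threshold."""
    if entropy(probs) > max_entropy_bits:
        return "I don't know"
    return greedy_answer(probs)

confident = {"2019": 0.92, "2020": 0.05, "2021": 0.03}
unsure = {"2019": 0.26, "2020": 0.25, "2021": 0.25, "2022": 0.24}

print(greedy_answer(unsure))         # "2019": answers anyway, despite a near coin-flip
print(answer_or_abstain(confident))  # "2019"
print(answer_or_abstain(unsure))     # "I don't know"
```

Base LLMs have no such abstention step built in; it has to be added around the model, which is one motivation for the mitigation layers discussed later.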

3. Ambiguous or Broad Prompts

Poorly defined queries force the model to make assumptions. Prompt engineering is therefore a key skill for anyone who uses AI models.

Wrong prompt: “Tell me about our company’s cloud security architecture.” (The AI has no organisational context and may invent details.)

Right prompt: “Summarise the cloud security architecture from our internal document: CloudSecurity_Architecture_v4.pdf.”
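The difference between the two prompts can be made repeatable with a template that supplies the document text and tells the model what to do when the answer is missing. This is a minimal sketch: the function name `build_grounded_prompt` and the exact instruction wording are hypothetical, and the document text is a placeholder for whatever is extracted from the PDF.

```python
def build_grounded_prompt(question, document_name, document_text):
    """Hypothetical template that restricts the model to the supplied document."""
    return (
        "You are answering questions about an internal document.\n"
        f"Document: {document_name}\n"
        "---\n"
        f"{document_text}\n"
        "---\n"
        "Answer using only the document above. "
        "If the answer is not in the document, reply exactly: "
        "\"Not found in the provided document.\"\n\n"
        f"Question: {question}"
    )

prompt = build_grounded_prompt(
    question="Summarise the cloud security architecture.",
    document_name="CloudSecurity_Architecture_v4.pdf",
    document_text="<text extracted from the PDF goes here>",  # placeholder
)
print(prompt)
```

Giving the model an explicit "not found" reply is a design choice: it offers a sanctioned alternative to guessing when the context does not contain the answer.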

To learn more, see my Prompt Engineering blog article:

https://midcai.com/post/prompt-engineering-in-salesforce-ai

One of the most common and often misunderstood reasons behind AI hallucinations is that most AI models do not automatically connect to an organisation’s live systems or proprietary knowledge sources.

Even advanced models like GPT, Claude, or Gemini operate primarily on the information they were trained on. This training data, though extensive, is static, meaning it does not continuously update itself with real-time business information.

Because of this, AI systems do not inherently have access to:

Without grounding, they generate answers based only on training data.

LLMs lack an internal “truth verification mechanism.” Unless explicitly layered with retrievers, fact-checkers, or enterprise data connectors, they act as language generators, not knowledge retrieval systems.

While AI hallucinations might seem harmless in everyday, casual interactions, they carry significant operational, financial, and reputational risks inside an enterprise environment. As businesses increasingly rely on AI to automate processes, support customers, and assist employees, the margin for error becomes smaller and the impact of incorrect AI-generated output becomes much larger.

AI hallucinations can result in material consequences across multiple functions:

Hallucinated responses in chatbots or support flows can lead to customers receiving wrong instructions, inaccurate troubleshooting steps, or false commitments that directly impact customer experience and trust.

When AI-generated suggestions drive IT workflows (like device provisioning, access requests, or configuration changes), an inaccurate output can cause system misconfigurations, service outages, or security gaps.

If an AI system incorrectly summarises or interprets internal policies, regulatory frameworks, or compliance mandates, it can lead employees to take actions that violate governance requirements.

Unverified AI summaries may misinterpret clauses, omit obligations, or generate inaccurate risk assessments, which is especially risky for procurement, legal, and vendor management teams.

When hallucinations seep into analytical outputs or decision-support systems, leaders might make strategic decisions based on fabricated or distorted information.

If an AI-generated blog, email, or customer communication contains incorrect facts, outdated data, or invented claims, it can harm brand credibility, especially in highly visible digital channels.

Industries such as BFSI, Healthcare, Pharmaceuticals, Insurance, and Government face magnified consequences because the accuracy of information is directly tied to regulatory compliance. In these sectors, hallucinations may lead to:

For such organisations, AI hallucinations are not merely a technical issue; they are a governance and risk management priority.

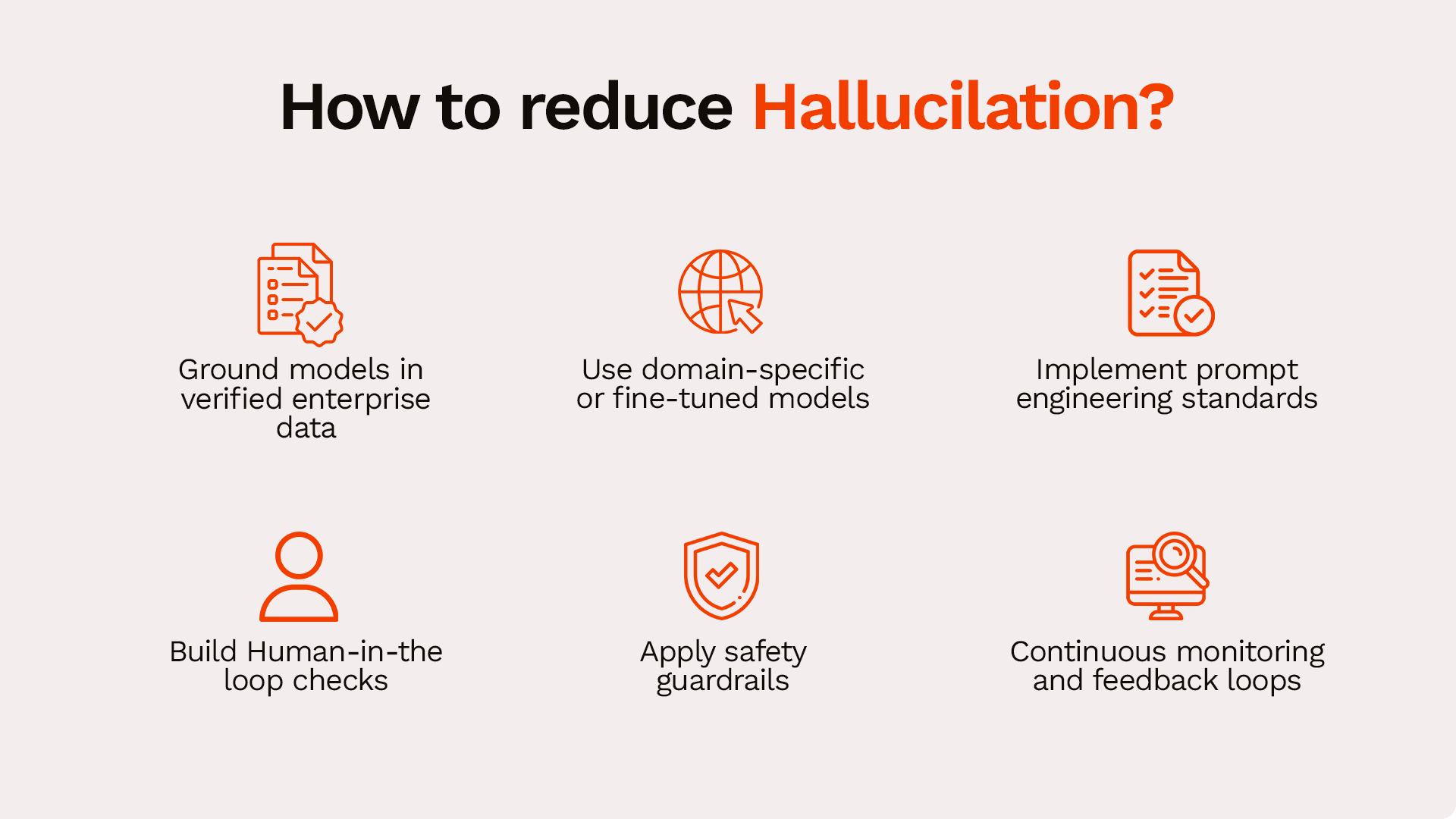

Hallucinations cannot be fully eliminated due to the probabilistic nature of LLMs. However, organisations can significantly reduce them through responsible architecture and governance.

Retrieval-Augmented Generation (RAG) grounds AI responses in trusted organisational data rather than relying solely on the model’s internal training patterns.

With RAG, the AI:

Typical data sources connected in enterprise RAG pipelines:

By grounding every response in authenticated data, organisations significantly reduce hallucinations across support, sales, and operations workflows.
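The retrieve-then-generate flow behind RAG can be sketched with nothing but the standard library. This is an illustration under stated assumptions: production pipelines typically use embedding models and a vector database, whereas this sketch swaps in simple bag-of-words cosine similarity; the knowledge-base entries and `KB-…` ids are hypothetical sample data, and the call to an actual LLM is left out.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase word tokens; a stand-in for a real embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, chunks, k=2):
    """Return up to k chunks that share any vocabulary with the query, best first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(c["text"]))), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for score, c in scored[:k] if score > 0]

def build_rag_prompt(query, chunks, k=2):
    """Assemble a prompt from retrieved sources, or None if nothing relevant was found."""
    hits = retrieve(query, chunks, k)
    if not hits:
        return None  # better to escalate than to let the model guess
    context = "\n".join(f"[{c['source']}] {c['text']}" for c in hits)
    return (
        "Answer only from the sources below and cite the source id in brackets.\n"
        f"{context}\n\nQuestion: {query}"
    )

# Hypothetical knowledge-base chunks, for illustration only.
knowledge_base = [
    {"source": "KB-101", "text": "Password resets are handled through the self-service portal."},
    {"source": "KB-202", "text": "VPN access requests require manager approval."},
    {"source": "KB-303", "text": "Laptops are refreshed every three years."},
]

print(build_rag_prompt("How do I request VPN access?", knowledge_base))
```

Returning `None` when retrieval finds nothing is deliberate: with no grounding material, the safest behaviour is to hand off rather than let the model answer from training data alone.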

2. Use Domain-Specific or Fine-Tuned Models

Generic models are broad and powerful, but they lack depth in specialised domains. Domain-specific models, or fine-tuned versions trained on curated datasets, typically show lower error and hallucination rates within their domain.

Examples:

These models are more context-aware, reduce ambiguity, and offer higher factual precision.

Define prompt guidelines for teams:

4. Build Human-in-the-Loop (HITL) Checks

For critical workflows:

This is essential for legal, compliance, IT, HR, and customer communications.
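A human-in-the-loop check often comes down to a routing rule that decides which AI drafts a person must approve. The sketch below is hypothetical: the domain labels mirror the areas named above, while the `confidence` score and the 0.8 threshold are assumptions standing in for whatever evaluation or grounding score a team actually produces.

```python
from dataclasses import dataclass

# Areas named above as needing human review (labels are illustrative).
REVIEW_DOMAINS = {"legal", "compliance", "it", "hr", "customer_communications"}

@dataclass
class Draft:
    domain: str
    text: str
    confidence: float  # hypothetical score in [0, 1] from an upstream check

def route(draft, min_confidence=0.8):
    """Return 'human_review' or 'auto_release' for an AI-generated draft."""
    if draft.domain.lower() in REVIEW_DOMAINS:
        return "human_review"      # critical domains are always reviewed
    if draft.confidence < min_confidence:
        return "human_review"      # low-confidence output elsewhere is reviewed too
    return "auto_release"

print(route(Draft("legal", "Clause 7 summary ...", 0.99)))     # human_review
print(route(Draft("marketing", "Product FAQ ...", 0.95)))      # auto_release
print(route(Draft("marketing", "Product FAQ ...", 0.50)))      # human_review
```

Keeping critical domains in review regardless of score reflects the point above: in these workflows a confident-sounding error is exactly what a human needs to catch.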

Guardrails help control or limit the AI’s response style:

Platforms like Salesforce Einstein, Azure OpenAI, and Amazon Bedrock provide built-in guardrails.

To learn more about the Salesforce Einstein Trust Layer, see: https://www.salesforce.com/products/einstein-ai/
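To make the guardrail idea concrete, here is a deliberately naive output check that blocks an answer when any sentence is poorly supported by the approved sources. It is a hypothetical sketch, not how the platforms named above implement their guardrails: word overlap is a crude proxy for factual support, and production systems usually rely on platform features or entailment models instead. The stopword list and the 0.6 threshold are assumptions.

```python
import re

STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "and", "in", "for", "on", "with", "by"}

def content_words(text):
    """Lowercased words minus a small stopword list."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def ungrounded_sentences(answer, sources, min_overlap=0.6):
    """Flag answer sentences whose content words mostly do not appear in the sources."""
    source_vocab = set().union(*(content_words(s) for s in sources))
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = content_words(sentence)
        if not words:
            continue
        if len(words & source_vocab) / len(words) < min_overlap:
            flagged.append(sentence)
    return flagged

def apply_guardrail(answer, sources):
    """Pass the answer through, or replace it with a safe fallback if anything is unsupported."""
    if ungrounded_sentences(answer, sources):
        return "I can't confirm that from the approved sources. Escalating to a specialist."
    return answer

sources = ["VPN access requests require manager approval."]
print(apply_guardrail("VPN access requires manager approval.", sources))
print(apply_guardrail("VPN access requires manager approval. Approval usually takes 48 hours.", sources))
```

The second answer is blocked because its added claim about timing has no support in the source, which is the pattern a groundedness guardrail is meant to catch.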

6. Continuous Monitoring & Feedback Loops

Hallucination reduction is not a one-time activity. It requires:

Organisations that treat AI as a “living system” tend to maintain the highest reliability.
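One small piece of such a feedback loop is tracking how often reviewers or users flag answers as hallucinated, and alerting when that rate drifts up. The class below is a hypothetical sketch: the rolling-window size and 5% alert rate are illustrative defaults, and the feedback itself is assumed to come from whatever review or rating process an organisation already runs.

```python
from collections import deque

class HallucinationMonitor:
    """Rolling hallucination rate over the most recent feedback events (hypothetical)."""

    def __init__(self, window=100, alert_rate=0.05):
        self.events = deque(maxlen=window)  # oldest events drop off automatically
        self.alert_rate = alert_rate

    def record(self, was_hallucination):
        self.events.append(bool(was_hallucination))

    @property
    def rate(self):
        return sum(self.events) / len(self.events) if self.events else 0.0

    def needs_attention(self):
        return self.rate > self.alert_rate

monitor = HallucinationMonitor(window=10, alert_rate=0.1)
for flagged in [False] * 8 + [True] * 2:
    monitor.record(flagged)
print(monitor.rate, monitor.needs_attention())  # 0.2 True
```

A rolling window keeps the metric focused on recent behaviour, so a prompt change, model update, or stale data source shows up quickly instead of being diluted by months of history.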

Industry leaders are investing in hallucination reduction at the platform level.

These advancements can make enterprise AI significantly more trustworthy than consumer-facing AI models.

AI hallucinations are not defects. They are an inherent outcome of how generative models predict language. But with the right combination of architecture, data discipline, governance, and oversight, organisations can minimise them to levels that are operationally safe and trustworthy.

A mature AI ecosystem is built on balance:

When these elements come together, AI becomes more than a tool. It becomes a reliable partner in decision-making, customer service, operations, and innovation. Organisations that invest in responsible AI practices not only reduce risk but also unlock the full potential of AI to drive productivity, efficiency, and competitive advantage without compromising on truth or trust.
